Ah, what a week already, and it's not over! First, Oracle released Oracle XE 18c (as I wrote on Sunday), and yesterday (October 22nd) MySQL 8.0.13 arrived along with, as is now usual, the whole fleet (Router, Shell, Workbench and the C++/J/ODBC/NET/Python/Node.js Connectors). I was eager to read the change log and, as the referenced article from MySQL's Server Blog also suggests, there are some interesting new features for designers and developers. Below are my picks and a few words on upgrading from 8.0.12.

SQL Default Values Can Now Be a Function or Expression

It's now possible to use a function or an expression as a default value. This is an important new feature. Previously only literal values were allowed (with the exception of CURRENT_TIMESTAMP for TIMESTAMP and DATETIME columns), which was quite limiting. With this limitation removed, it is now possible:

- to generate UUID values by default (by using DEFAULT (uuid_to_bin(uuid())) for example);

- to generate default values for geometry data types (by using DEFAULT (POINT(0,0)) or DEFAULT (ST_PointFromText('POINT(42.69751 23.32415)', 4326)) for example);

- to generate default values for JSON columns (by using DEFAULT (JSON_ARRAY()) or DEFAULT ('[]') for example); and

- to generate other complex values (like DEFAULT (CURRENT_DATE + INTERVAL 1 DAY) or DEFAULT (PI() * POW(r, 2)) for example) using one or more functions in an expression.

Remark: Please note the parentheses surrounding the expression in DEFAULT (see Handling of Explicit Defaults as of MySQL 8.0.13 in the manual). MySQL Workbench 8.0.13 again has some problems parsing the new syntax (see screenshot below), so I opened bug 92900 and it already got verified.

I tried the new functionality with the following:

CREATE TABLE def_expr (

id INT NOT NULL AUTO_INCREMENT,

uuid_def BINARY(16) DEFAULT (uuid_to_bin(uuid())),

geo_def POINT DEFAULT (Point(0,0)),

geo_def2 GEOMETRY DEFAULT (ST_PointFromText('POINT(42.69751 23.32415)', 4326)),

json_def JSON DEFAULT (JSON_ARRAY()),

json_def2 JSON DEFAULT ('[]') /* this works too */,

tomorrow DATE DEFAULT (CURDATE() + INTERVAL 1 DAY),

radius INT DEFAULT (FLOOR(1 + (RAND() * 10))),

area DECIMAL(10,3) DEFAULT (ROUND(PI() * POW(radius, 2), 3)),

PRIMARY KEY (id),

UNIQUE INDEX id_UNIQUE (id ASC) VISIBLE

);

Then I inserted two dummy rows and got the following results:

INSERT INTO def_expr VALUES (); /* x 2 */

SELECT id,

bin_to_uuid(uuid_def) uuid_def,

ST_AsText(geo_def) geo_def,

ST_AsText(geo_def2) geo_def2,

json_def, json_def2,

tomorrow, radius, area

FROM def_expr;

+----+--------------------------------------+------------+--------------------------+----------+-----------+------------+--------+---------+
| id | uuid_def                             | geo_def    | geo_def2                 | json_def | json_def2 | tomorrow   | radius | area    |
+----+--------------------------------------+------------+--------------------------+----------+-----------+------------+--------+---------+
|  1 | a2747ee0-d6ee-11e8-b02e-02004c4f4f50 | POINT(0 0) | POINT(42.69751 23.32415) | []       | []        | 2018-10-24 |      2 |  12.566 |
|  2 | a2ff920b-d6ee-11e8-b02e-02004c4f4f50 | POINT(0 0) | POINT(42.69751 23.32415) | []       | []        | 2018-10-24 |     10 | 314.159 |
+----+--------------------------------------+------------+--------------------------+----------+-----------+------------+--------+---------+

2 rows in set (0.0021 sec)

That's cool, right? I'm eager to use it in my projects.
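The parentheses rule from the remark above can be illustrated with a minimal sketch. The table `def_paren` and its columns are made up for this example, and the commented-out line shows the form I would expect the parser to reject, not something I ran.

```sql
-- Hypothetical table, not part of the test above.
CREATE TABLE def_paren (
  id         INT NOT NULL AUTO_INCREMENT PRIMARY KEY,
  -- literal default: no parentheses needed
  n          INT DEFAULT 0,
  -- long-standing exception: CURRENT_TIMESTAMP is allowed without parentheses
  created_at DATETIME DEFAULT CURRENT_TIMESTAMP,
  -- expression default: parentheses required
  due_on     DATE DEFAULT (CURRENT_DATE + INTERVAL 7 DAY),
  -- same expression without parentheses: syntax error expected
  -- due_bad DATE DEFAULT CURRENT_DATE + INTERVAL 7 DAY,
  -- JSON columns accept a default only when it is written as an expression
  tags       JSON DEFAULT (JSON_ARRAY())
);

-- Both statements leave the defaults to the server.
INSERT INTO def_paren () VALUES ();
INSERT INTO def_paren (n, due_on) VALUES (DEFAULT, DEFAULT);

SELECT * FROM def_paren;
```

The explicit DEFAULT keyword in the second INSERT asks for the same expression to be evaluated at insert time, which can be handy when only some of the columns should fall back to their defaults.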

SQL Functional Indexes

It's now possible to have functional indexes (see Functional Key Parts in the manual), which means that the index is built from expression values instead of column values or column prefix values. In other words, you can index values not stored in the table, which I think could be quite powerful in some cases. In composite indexes with multiple key parts you can mix functional and nonfunctional (regular) key parts. Functional key parts are implemented as hidden virtual generated columns (see CREATE TABLE and Generated Columns in the manual), which have been available since MySQL 5.7.6 (from 2015-03-09). This also means that functional key parts have the same restrictions (i.e. deterministic built-in functions and operators are permitted, but subqueries, parameters, variables, stored functions and user-defined functions are not).
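To make the relation to generated columns more concrete, here is a rough sketch. The table, column and index names are hypothetical. The first table mixes a functional and a regular key part in one composite index, and the second shows roughly what one would write by hand with a virtual generated column since 5.7.

```sql
-- Hypothetical names, used only for illustration.
CREATE TABLE fkp_demo (
  col1 INT,
  col2 INT,
  -- one functional key part followed by one regular key part
  INDEX mixed_idx ((col1 + col2), col1)
);

-- Approximately the same idea written by hand with a generated column.
CREATE TABLE gen_col_demo (
  col1 INT,
  col2 INT,
  -- the indexed expression becomes an ordinary, visible virtual column
  col_sum BIGINT GENERATED ALWAYS AS (col1 + col2) VIRTUAL,
  INDEX mixed_idx (col_sum, col1)
);
```

The visible difference is that the generated column in the second table shows up in SELECT * and DESCRIBE, while the column behind a functional key part stays hidden.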

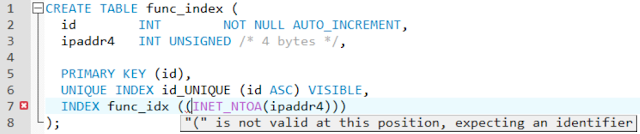

To try the new functionality I thought of IP addresses: they are usually stored as strings in databases and then parsed, but an IPv4 address can be represented as an (unsigned) integer value, which requires just 4 bytes. So I used the following:

CREATE TABLE func_index (

id INT NOT NULL AUTO_INCREMENT,

ipaddr4 INT UNSIGNED /* 4 bytes */,

PRIMARY KEY (id),

UNIQUE INDEX id_UNIQUE (id ASC) VISIBLE,

INDEX func_idx ((INET_NTOA(ipaddr4)))

);

Remark: Please note the parentheses surrounding the expression. Without them you would get error code 1064 (syntax error). Not surprisingly, MySQL Workbench 8.0.13 wasn't ready for the new syntax, so I opened bug 92908 and it also already got verified.

Then I inserted a row and ran EXPLAIN on the two SELECT queries below:

INSERT INTO func_index (ipaddr4) VALUES (INET_ATON('192.168.1.1'));

SELECT * FROM func_index WHERE ipaddr4 = INET_ATON('192.168.1.1');

SELECT * FROM func_index WHERE INET_NTOA(ipaddr4) = '192.168.1.1';

The first one would result in a full table scan, while the second one would use the functional index func_idx. For a query to use the index, it's important that the expression in the WHERE clause matches the index's specification.
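The access path for each query can be checked with EXPLAIN, as in the sketch below. It reuses the func_index table from above. The extra index ipaddr4_idx is my own addition for comparison, not part of the original test, and with only a single row in the table the optimizer's choices may differ from those on real data.

```sql
-- No index covers the bare column, so a full scan is the likely plan.
EXPLAIN SELECT * FROM func_index WHERE ipaddr4 = INET_ATON('192.168.1.1');

-- The WHERE expression matches the functional key part of func_idx.
EXPLAIN SELECT * FROM func_index WHERE INET_NTOA(ipaddr4) = '192.168.1.1';

-- Assumption: a regular index on the integer column (hypothetical name)
-- should let the first query avoid the scan as well.
ALTER TABLE func_index ADD INDEX ipaddr4_idx (ipaddr4);
EXPLAIN SELECT * FROM func_index WHERE ipaddr4 = INET_ATON('192.168.1.1');
```

In the EXPLAIN output the key column shows which index, if any, was chosen for each statement.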

Option to Disallow Tables without a Primary Key

There is now the system variable sql_require_primary_key, which, if set, prevents the creation or altering of tables without a primary key. In that case CREATE or ALTER queries fail with error code 3750 (see also the error symbol ER_TABLE_WITHOUT_PK).

I tried this new possibility by setting the global variable in a session like this:

SET GLOBAL sql_require_primary_key = ON;

but I was still able to create a table without a primary key. I have to check it again tomorrow and possibly report a bug. Setting the variable in my.ini and restarting the server worked just fine:

SQL> CREATE TABLE tab_no_pk (test INT);

ERROR: 3750: Unable to create a table without PK, when system variable 'sql_require_primary_key' is set. Add a PK to the table or unset this variable to avoid this message. Note that tables without PK can cause performance problems in row-based replication, so please consult your DBA before changing this setting.

I'll finally have a break from developers not familiar with relational concepts :-)
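A possible explanation for the SET GLOBAL result above, offered as an assumption and not as a confirmed diagnosis: the variable has both a global and a session value, and SET GLOBAL changes only the value that new connections start with, so the connection that issued it keeps its old session value. A minimal sketch for checking this:

```sql
-- Compare the two scopes as seen by the current connection.
SELECT @@GLOBAL.sql_require_primary_key  AS global_value,
       @@SESSION.sql_require_primary_key AS session_value;

-- Change the value for the current connection only.
SET SESSION sql_require_primary_key = ON;

-- With the session value ON, this should be rejected with error 3750.
CREATE TABLE tab_no_pk (test INT);

-- Alternative to editing my.ini: persist the global value across restarts.
SET PERSIST sql_require_primary_key = ON;
```

If the first SELECT shows the global value ON and the session value OFF right after SET GLOBAL, reconnecting should be enough to pick up the new setting.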

Geometry transformation

It's now possible to transform geometry from one spatial reference system (SRS) to another using the built-in function ST_Transform, which accepts the target SRS as its second argument. I'm not that much into GIS, so I didn't test this one, but it seemed worth mentioning.
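For the curious, an untested sketch of what a call could look like. It reuses the point from the DEFAULT example in SRID 4326, and the target SRID 4230 is picked purely for illustration. As far as I understand the release notes, the initial implementation converts between geographic SRSs only, so a projected target may be rejected.

```sql
-- Source point in SRID 4326, same coordinates as in the DEFAULT example.
SET @p = ST_GeomFromText('POINT(42.69751 23.32415)', 4326);

-- Transform to another geographic SRS; 4230 is an arbitrary choice here.
SET @q = ST_Transform(@p, 4230);

-- Show both geometries together with their SRIDs.
SELECT ST_SRID(@p) AS src_srid, ST_AsText(@p) AS src_point,
       ST_SRID(@q) AS dst_srid, ST_AsText(@q) AS dst_point;
```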

A few words on upgrading (from 8.0.12)

Upgrading my Windows installation was flawless with MySQL Installer for Windows. However, I had a problem with my Linux installation, which hosts more databases. The server wasn't able to start and in the error log I found errors like the following:

2018-10-23T12:47:49.627732Z 1 [ERROR] [MY-013235] [Server] Error in parsing View 'db'.'aView' during upgrade. You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'desc' at line 1

I opened bug 92898 for this, and support has already explained the problem as the result of an incompatible change (see SQL Syntax Notes in the release notes). I'm pretty sure I've read about ASC or DESC qualifiers for GROUP BY clauses being deprecated, and I really do not remember why I used such syntax, but it caught me off guard, so I had to update the view definitions.
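The kind of rewrite this required can be sketched as follows. The table orders and the view order_totals are hypothetical stand-ins, not my real definitions; the idea is simply to drop the qualifier from GROUP BY and move the sorting into an explicit ORDER BY.

```sql
-- Hypothetical table standing in for the real one.
CREATE TABLE orders (
  customer_id INT,
  amount      DECIMAL(10,2)
);

-- Form accepted up to 8.0.12 and rejected by the 8.0.13 parser:
-- CREATE VIEW order_totals AS
--   SELECT customer_id, SUM(amount) AS total
--   FROM orders
--   GROUP BY customer_id DESC;

-- Rewritten: plain GROUP BY, sorting moved to ORDER BY.
CREATE OR REPLACE VIEW order_totals AS
  SELECT customer_id, SUM(amount) AS total
  FROM orders
  GROUP BY customer_id
  ORDER BY customer_id DESC;
```

Fixing such views before the upgrade, while the server still runs 8.0.12, avoids ending up with a server that refuses to start.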